Project Description

Phi-2 Model Deployment on Raspberry Pi 5 Using llama.cpp

Overview

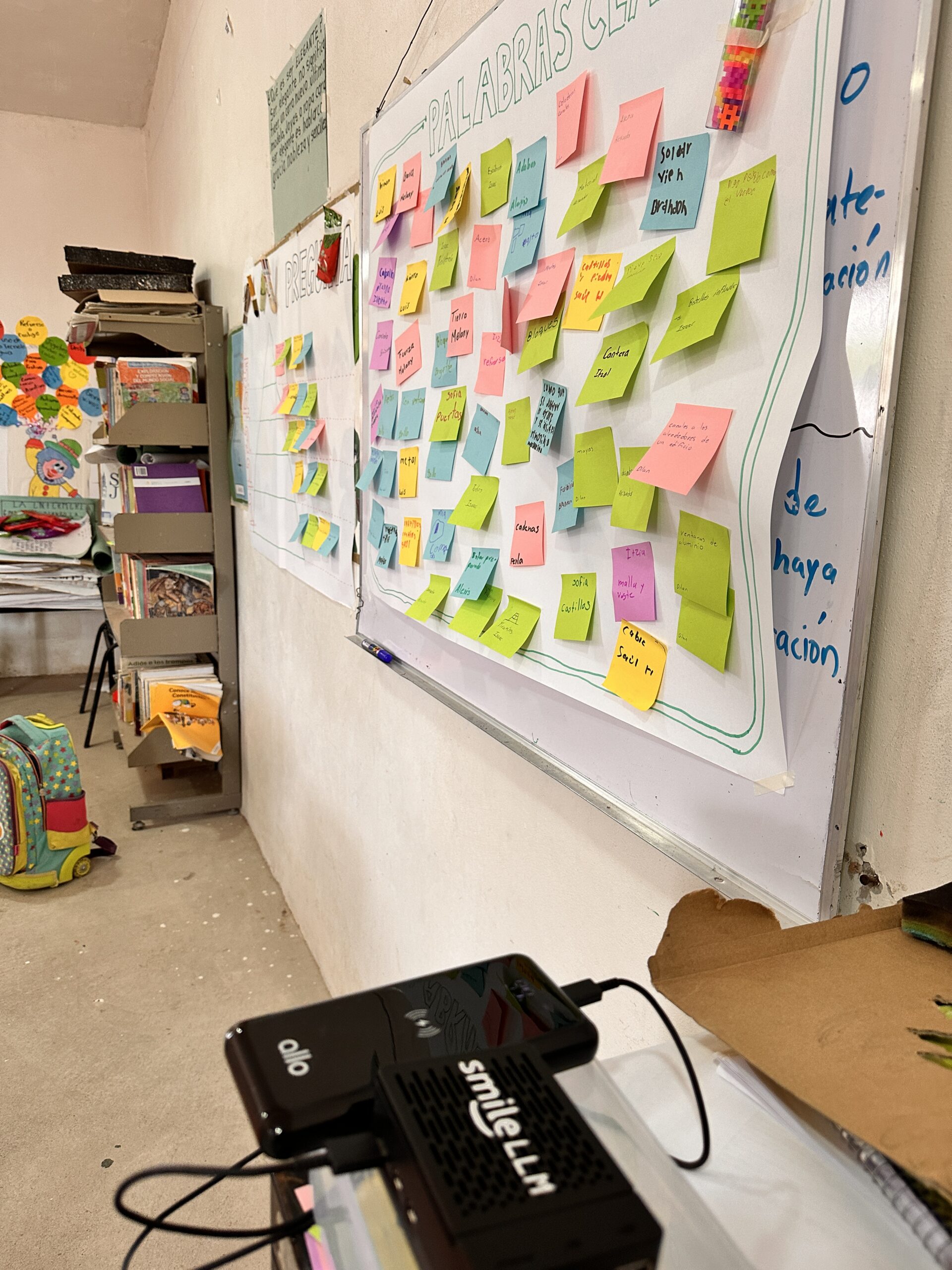

This project deploys the Phi-2 large language model (LLM) on a Raspberry Pi 5 with 8GB of RAM, using llama.cpp for optimized local inference. The goal is to enable offline AI capabilities for research and development on AI safety challenges such as bias reduction and toxicity mitigation.

Hardware Specifications

The Raspberry Pi 5 is built around a Broadcom BCM2712 quad-core Arm Cortex-A76 processor running at 2.4GHz, paired with 8GB of LPDDR4X-4267 SDRAM. This is enough compute and memory for small-scale model inference, and it runs the Phi-2 model locally at a usable speed.

Software Framework

llama.cpp is used to run the Phi-2 model. It improves performance through support for ARM architectures and integer quantization. This project uses a 5-bit quantization of the Phi-2 model (Phi-2_Q5_K_M). Quantization lowers the numerical precision of the model’s parameters, which reduces both the memory footprint and the amount of data read and processed during inference, so the model fits comfortably within the limited RAM of the Raspberry Pi.
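
As a rough illustration of why quantization matters here, the sketch below estimates the weight storage for a 2.7-billion-parameter model at different precisions. The function name `weight_footprint_gb` is hypothetical, and the calculation uses nominal bit widths only. The actual Phi-2_Q5_K_M file is expected to be somewhat larger than the 5-bit figure, since K-quant formats typically store per-block scale data and keep some tensors at higher precision, and inference also needs memory for the KV cache and runtime buffers.

```python
def weight_footprint_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


PHI2_PARAMS = 2.7e9  # parameter count stated for Phi-2

# Nominal bit widths only; real quantized files carry extra metadata.
for label, bits in [("16-bit float", 16), ("8-bit integer", 8), ("5-bit nominal", 5)]:
    print(f"{label:>14}: {weight_footprint_gb(PHI2_PARAMS, bits):.2f} GB")
```

At 16 bits the weights alone need about 5.4 GB, which leaves little headroom on an 8GB board, while the nominal 5-bit figure is roughly 1.7 GB.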

Phi-2 Model Details

Phi-2 is a Transformer with 2.7 billion parameters, designed for QA, chat, and code generation tasks. The original checkpoint is supported by the Transformers library (version 4.37 and later); in this project it runs on the Raspberry Pi 5 as a quantized model through llama.cpp.

Architecture: a Transformer-based model with a next-word prediction objective

Context length: 2048 tokens

Dataset size: 250B tokens, a combination of NLP synthetic data created by AOAI GPT-3.5 and filtered web data from Falcon RefinedWeb and SlimPajama, which was assessed by AOAI GPT-4

Training tokens: 1.4T tokens

GPUs: 96xA100-80G

Training time: 14 days
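
For orientation, here is a minimal sketch of how a quantized Phi-2 file might be loaded and queried from Python. It assumes the `llama-cpp-python` bindings are installed, which this description does not mention (the project may equally use the llama.cpp command-line tools), and the model path `models/phi-2.Q5_K_M.gguf` is a hypothetical placeholder. The `Instruct: ... Output:` layout follows the QA prompt format documented in the Phi-2 model card. `n_ctx` matches the 2048-token context length listed above, and `n_threads` matches the four cores of the Raspberry Pi 5.

```python
def build_qa_prompt(question: str) -> str:
    """Format a question using Phi-2's documented QA prompt layout."""
    return f"Instruct: {question.strip()}\nOutput:"


def generate(question: str,
             model_path: str = "models/phi-2.Q5_K_M.gguf",  # hypothetical path
             max_tokens: int = 128) -> str:
    """Run one completion with llama-cpp-python (assumed to be installed)."""
    # Imported lazily so the prompt helper works without the bindings.
    from llama_cpp import Llama

    llm = Llama(model_path=model_path, n_ctx=2048, n_threads=4, verbose=False)
    result = llm(build_qa_prompt(question), max_tokens=max_tokens,
                 stop=["Instruct:"])  # stop before the model starts a new turn
    return result["choices"][0]["text"].strip()
```

On the Pi, a call such as `generate("What is quantization?")` would load the model and return the generated answer as a string. Loading the model once and reusing the `Llama` object across requests would avoid repeating the load cost in a chatbot.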

Intended Use and Applications

Deploying Phi-2 on the Raspberry Pi 5 targets educational and experimental uses in settings without cloud dependencies. The model can be used and demonstrated in standalone or limited-connectivity environments.

Limitations and Considerations

Performance: Inference on the Raspberry Pi 5 runs at about 5 tokens per second, which is enough for an interactive chatbot, although the streamed response is somewhat slow.
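
To make the throughput figure concrete, the following sketch converts a generation rate into the time a user waits for a full reply. The helper name and the example reply lengths are illustrative assumptions. Only the rate of about 5 tokens per second comes from the figure above, and prompt-processing time is ignored.

```python
def streaming_time_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Time to stream num_tokens at a steady generation rate."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


RATE = 5.0  # approximate tokens per second reported for the Raspberry Pi 5

for reply_tokens in (50, 200, 500):  # illustrative reply lengths
    seconds = streaming_time_seconds(reply_tokens, RATE)
    print(f"{reply_tokens:>4} tokens -> {seconds:.0f} s")
```

At this rate a 200-token answer takes about 40 seconds to finish streaming, so capping the reply length is one simple way to keep a chatbot responsive.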

Accuracy and Reliability: Outputs from Phi-2 should be treated as suggestions, as the model can produce incorrect code or factual errors.

Language and Bias: The model is optimized for standard English and may inadvertently exhibit biases from its training data.

Conclusion

Running Phi-2 on a Raspberry Pi 5 shows that a 2.7-billion-parameter language model can run in a compact, fully local setup. This project makes AI tools accessible without extensive infrastructure.